Hardly a week goes by without a handful of ‘meltdowns’ occurring around the world. Your research shows that these incidents have a shared DNA. Please explain.

András Tilcsik: If you look closely at the various failures we are seeing, from transportation mishaps and industrial accidents to IT meltdowns, their underlying causes are surprisingly similar. The systems that are most vulnerable to such failures, in which a combination of small glitches and human errors brings down the entire system, share two key characteristics.

The first is complexity, which means that the system itself is not linear: It’s more like an elaborate web — and much of what goes on in these systems is invisible to the naked eye. For example, you can’t just send someone in to figure out what’s going on in a nuclear power plant’s core, any more than you can send someone to the bottom of the sea when you’re drilling for oil. It’s not just large industrial organizations grappling with this issue: Regular businesses are also increasingly complex, with parts that interact in hidden and unexpected ways.

The second characteristic shared by vulnerable systems is tight coupling. This is an engineering term that indicates a lack of ‘slack’ in a system. It basically means that there is very little margin for error. If something goes wrong, you won’t have much time to figure out what’s happening and make adjustments. You can’t just say, ‘Time out! I’m going to step out of this situation, figure out what’s going on and then go back in and fix it’.

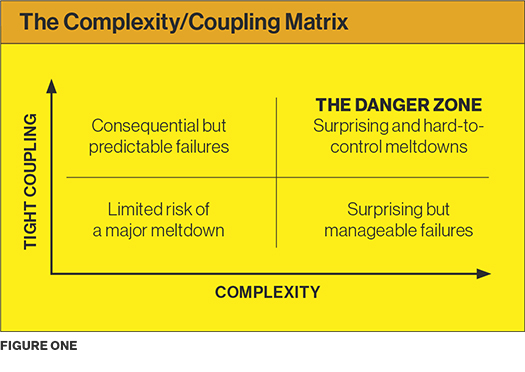

The theory is that when a system features both of these conditions, complexity and tight coupling, it is highly susceptible to surprising and disproportionately large failures. In a complex system, small errors can come together in surprising ways, leading to confusing symptoms and difficulty in diagnosing the problem in real time. If the system is also tightly coupled, it’s very difficult to stop the falling dominoes. The presence of both complexity and tight coupling pushes you into a danger zone.

This is not our theory, by the way. Sociologist Charles Perrow developed it in the early 1980s — but it is proving to be especially pertinent to our modern systems. When the theory was developed, very few systems were both complex and tightly coupled: exotic, high-risk systems like nuclear power plants, certain military systems, and space missions, for example. Most other systems might have been complex, but not tightly coupled; or tightly coupled, but not all that complex. Take a traditional dam: It was tightly coupled because if it failed, you would have all sorts of terrible consequences downstream. But at the same time, it was not a very complex system. If a gate had to be opened, for example, a dam tender could simply walk up to the dam crest, open a gate and make sure that the right gate was indeed moving. It was a linear system, and most things were visible to the naked eye.

You have said that some systems that used to be outside of the danger zone have been moving inside of it. How so?

To continue with the dam example, the way dams are organized today has completely changed. There are now dam operators overseeing multiple sites from remote control rooms, where they look at computer screens, click virtual buttons, and rely on sensors installed at far-away dams. In short, they are removed from having direct access to the system.

Another example is post offices, which were traditionally quite simple and loosely coupled. But even that has been changing. In the book, we discuss a massive failure in a post-office setting due to a new IT system, which pushed the entire operation into the danger zone by making it much harder to peek into and much more tightly coupled.

How does a task like ‘preparing Thanksgiving dinner’ fit into the danger zone?

First, let’s look at it from a tight-coupling perspective. Thanksgiving is held on a particular day each year. You don’t have any flexibility around that, so there is inherent time pressure. Further, you only have one turkey to prepare, and most homes have only one oven. So, if you mess things up, there is no easy recovery. You can’t just say, ‘Sorry everyone, please come back next week.’

The meal itself is also quite complex: Some parts of the turkey require more cooking, while others need less; and yet, as in a nuclear power plant, it’s very difficult to directly get a sense of what’s going on inside the system. You have to rely on indirect indicators, like a thermometer. Also, the side dishes depend on one another: Gravy comes from the roasted turkey’s juices, and the stuffing often cooks inside the bird. This combination of complexity and tight coupling sets us up for surprising failures. While researching the book, we heard about some crazy Thanksgiving disasters. One person reported using cough syrup instead of vanilla flavouring in one of her desserts. Without all the time pressure and complexity, such mistakes are far less likely to happen.

In contrast, cooking spaghetti and meat sauce on a weeknight is pretty low on the complexity scale, in part because you can see what you’re doing. It’s right there in front of you; you know when the pasta is boiling and you can see and even hear the sauce cooking. Also, the two parts of the meal don’t depend on each other: You can make the sauce first — even a month earlier if you like, and freeze it. So you have a lot more choice in terms of the sequencing of tasks, and it is a simpler and more forgiving system. The problem is, many of our systems these days — in business and in life — are a lot more like Thanksgiving than a weeknight spaghetti dinner.

On that note, you have said that a big company is ‘more like a nuclear reactor than an assembly line’. Please explain.

Let’s say you run a small, four-person operation where everyone sits in the same office, so you can easily monitor what they are doing. In a large company, you lose that, and as soon as you can’t see the inner workings of the system itself (in this case, the activities of your employees), complexity rises. To return to the example of a nuclear power plant’s core, you can’t just walk up to it and measure the temperature manually, or peek into it and say, ‘There is way too much coolant liquid in there!’ Similarly, as an executive in a large company, you can’t monitor every employee and see what they’re doing; you have to rely on indirect indicators like performance evaluations and sales results. Of course, it’s not just about employees: Large companies increasingly rely on complex IT systems for their operations, and externally, they rely on complicated supply chains.

A large company is also a tightly-coupled system because everything it does is so visible, thanks to technology and social media. What used to be small, isolated incidents can now be magnified very easily. If your friendly neighbourhood bakery sells you a stale croissant, you will be disappointed, but you aren’t likely to go on social media and complain about it. Even if you did, there wouldn’t be a massive ripple effect. But if a large, visible company like Apple or Air Canada does something upsetting, all it takes is one angry tweet or viral video to explode on social media and create a PR disaster.

You believe that social media itself is a complex and tightly coupled system. How so?

Social media is an intricate web, almost by definition. It’s made up of countless connected people with many different views and motives, so it’s hard to know how they will react to a particular message. It is also tightly coupled: Once the genie is out of the bottle, you can’t put it back in, so negative messages spread quickly and uncontrollably. In the book, we discuss some recent PR meltdowns that simply couldn’t have happened without the complex, unforgiving system that social media has become.

Should we be trying to avoid complexity and tight coupling?

Not necessarily. In many cases, we make our systems more complex and tightly coupled for good reasons. Complex systems enable us to do all sorts of innovative things, and tight coupling often arises because we are trying to make a system leaner and more efficient. That is the paradox of progress: We often do these things for good economic reasons; but, at the same time, we are pushing ourselves into the danger zone without realizing it.

The takeaway shouldn’t be to stop building complex and tightly-coupled systems altogether. That is not even feasible at this point. The takeaway is to think carefully about complexity and tight coupling whenever you are designing a system, expanding it, or setting up new processes. As you set things up, along the way you will have choices to make, and if you are consciously thinking about these dimensions, you can sometimes choose to lower either complexity or tight coupling.

Say you are an executive overseeing a big retail expansion. You probably can’t lower the complexity factor; there will be lots of moving parts and unknowns, no matter what — but you might have some leeway in terms of tight coupling. Rather than choosing a fast-paced, ambitious timetable, you can try a slower and more gradual approach. For instance, you might tackle just a few stores at a time to create more slack. In terms of Perrow’s framework, that means you will still see some surprises due to the complexity of the expansion — but they won’t be as nasty, because you will have time to respond. They will be ‘manageable surprises’.

You believe the financial system is a perfect example of a complex, tightly coupled system. What can be done about that?

In a 2012 interview, Perrow actually said that the financial system exceeds the complexity of any nuclear plant he has ever studied. Unfortunately, I don’t see much opportunity for making it radically simpler or more loosely coupled. Of course, as with healthcare, aviation and our other big systems, we might be able to design things in a way that makes a difference on the margins. But I think finance is an example where the best we can do is learn how to manage within and regulate this very complex and tightly-coupled system.

You believe financial regulators could learn some important lessons from aviation regulators. How so?

In many parts of the world, financial regulation is very punitive and rule-focused. We go after the bad apples, and this approach was probably sufficient at a time when the system was simpler and less tightly coupled. But right now, a simple punitive approach is an impediment to learning about the complex risks emerging in the industry.

In the U.S., the aviation industry dealt with similar challenges quite effectively. Two distinct government agencies oversee the industry: There is the Federal Aviation Administration, which is more enforcement-based and goes after bad actors, but there is also the National Transportation Safety Board (NTSB), which is focused on learning — with an understanding that the system is far too complex for any one pilot or airline to figure out. A lot of what the NTSB does involves learning from accidents, near accidents and small, weak signals of failure. It shares its findings with the entire industry, and all the players recognize that ‘we’re all in this together’. Because the NTSB’s primary mission is safety, rather than rule enforcement, it is also able to consider how the rules themselves contribute to accidents. In addition, the industry uses anonymous reporting to collect and share data on near misses. This is called the Aviation Safety Reporting System, and it’s run by an independent unit at NASA — a neutral party with no enforcement power.

These approaches — the open reporting of mistakes, a trusted third party, and a collective focus on learning — are very much missing from finance. And meltdowns like the failure of Knight Capital — which lost nearly half a billion dollars in half an hour due to a software glitch — will be very difficult to prevent without a more learning-oriented regulatory approach.

Do you have any parting tips for navigating complex and tightly-coupled systems?

Diagnosis is a good first step. The complexity/coupling matrix (see Figure One) can help you figure out if you are vulnerable to surprising meltdowns. Simply knowing that a part of your system, organization or project is vulnerable to nasty surprises can help you figure out where to concentrate your efforts. For example, you might try to simplify the problematic parts of the system, increase transparency or add some slack.

Of course, sometimes it’s impossible to reduce either complexity or tight coupling, but even then, you can navigate the danger zone more effectively. For instance, we can all do a better job of encouraging dissent and listening to voices of concern about emerging risks; and we can learn from weak signals of failure to prevent future major meltdowns. Lastly, we can learn crisis management lessons from people who deal with complex surprises all the time: SWAT teams, ER doctors and film crews, for example.

Research shows that diversity can be very helpful for navigating complex, tightly-coupled systems. How does that work?

Researchers are finding that diverse teams create value for reasons beyond the fact that women, racial minorities and people with different professional backgrounds bring distinct ideas to the table. Diversity also helps because it makes everyone on a team slightly less comfortable and, as a result, more skeptical. When we are in a diverse group, we are more likely to question the group’s assumptions, and that kind of skepticism can be very valuable in a complex and tightly-coupled system. In contrast, when we are surrounded by people who look like us in terms of gender, race and education, we tend to assume that they also think like us, and that makes us work less hard in both a cognitive and a social sense.

When navigating a simple system, diversity might actually be a net cost, because it can make it harder to coordinate across different groups. But, in a sense, that friction is exactly what you want in a complex, tightly-coupled system. You don’t want people’s assumptions to remain unstated, or for nagging concerns to go unraised. You want people to voice their concerns, because that’s how we learn. Particularly in the danger zone, skeptical voices are crucial, because the cost of being wrong is just too high.

András Tilcsik holds the Canada Research Chair in Strategy, Organizations and Society and is an Associate Professor of Strategic Management at the Rotman School of Management. He is the co-author, with Chris Clearfield, of Meltdown: Why Our Systems Fail and What We Can Do About It (Penguin Press, 2018). Prof. Tilcsik has been named to the Thinkers50 Radar list of the 30 management thinkers in the world most likely to shape the future of organizations.

Rotman faculty research is ranked in the top 10 worldwide by the Financial Times.

This article appeared in the Spring 2018 issue. Published by the University of Toronto’s Rotman School of Management, Rotman Management explores themes of interest to leaders, innovators and entrepreneurs.
